Honda’s Mobile Mentor

Project Overview

Timeline 8 months

Role UX Designer

Team Alex Holder Product Manager

Alana Levene UX Researcher

Anthony Teo Software Engineer

Vera Li UX Designer

Tools Figma, Midjourney, D-ID, ElevenLabs, Inworld AI, Adobe CC, Unreal Engine

How might we harness technological advancements to create in-vehicle learning experiences?

Our Master of Human-Computer Interaction capstone team is exploring the future of learning in transit, focusing on Gen Z passengers in the year 2030 and beyond. We are working with 99P Labs, which is the research institute for Honda, to design this experience. How will people spend their time in transit once partial autonomy takes care of driving?

PROBLEM

Commute time is perceived as time wasted.

Gen Z passengers find themselves in a transitional space between origin and destination. They want to learn and develop themselves, but often feel constrained in what they can do in the vehicle.

SOLUTION

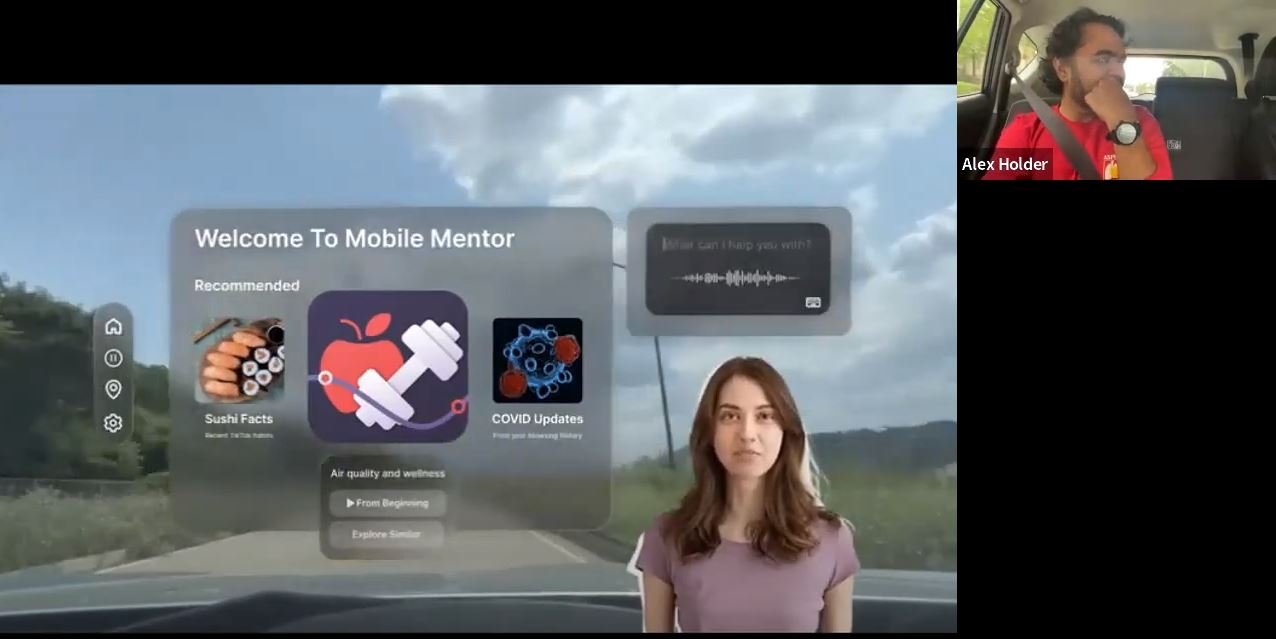

A Mobile Mentor that meets your personal learning goals.

We are designing a conversational agent that will help passengers learn in the vehicle, aided by augmented reality interfaces.

Learn something new or pick up where you left off

01

The Mobile Mentor system uses an AI avatar as a conversational agent. Augmented reality interfaces are projected in the vehicle, providing visual aid for navigation and education. The Mobile Mentor detects the passenger’s emotional state and recommends topics that are interesting to the passenger.
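One way to picture the recommendation step is a simple filter-and-rank, sketched below in Python. Everything in it is an assumption made for illustration: the `Topic` fields, the 0-to-1 `energy` score standing in for the detected emotional state, and the `recommend` function are hypothetical and do not describe the prototype's actual logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Topic:
    title: str
    interest: float  # hypothetical 0-1 score: how well the topic matches the passenger's goals
    effort: float    # hypothetical 0-1 estimate of the cognitive effort the topic demands


def recommend(topics: List[Topic], energy: float, limit: int = 2) -> List[Topic]:
    """Return up to `limit` topics the passenger has the energy for, most interesting first.

    `energy` is an assumed 0-1 value derived from the detected emotional state.
    """
    # Keep only topics that do not demand more effort than the passenger can give.
    suitable = [t for t in topics if t.effort <= energy]
    # Rank what remains by interest, highest first.
    return sorted(suitable, key=lambda t: t.interest, reverse=True)[:limit]


# Illustrative catalog; titles and scores are made up.
catalog = [
    Topic("Intro to photography", interest=0.9, effort=0.4),
    Topic("Organic chemistry review", interest=0.7, effort=0.8),
    Topic("City history along the route", interest=0.6, effort=0.2),
]

picks = recommend(catalog, energy=0.5)
print([t.title for t in picks])
```

With a tired passenger (`energy=0.5`), the demanding chemistry review is filtered out and the lighter topics are offered in order of interest.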

Dive deeper into a learning topic that sparks your curiosity

02

The interface lets the passenger learn more about a topic, ask for clarification on the current topic, or change the topic. Passengers can give input by voice or touch.
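The three actions and two input modes can be modeled as a small intent handler, sketched below in Python. This is a minimal illustration under stated assumptions, not the prototype's implementation: the phrase table, the button IDs (`btn_more`, `btn_clarify`, `btn_change`), and the `Session` fields are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Intent(Enum):
    LEARN_MORE = auto()
    CLARIFY = auto()
    CHANGE_TOPIC = auto()


@dataclass
class Session:
    topic: str
    depth: int = 0  # how many times the passenger has asked to go deeper


# Hypothetical lookup tables: both input modes resolve to the same intents.
VOICE_PHRASES = {
    "tell me more": Intent.LEARN_MORE,
    "what do you mean": Intent.CLARIFY,
    "change topic": Intent.CHANGE_TOPIC,
}
TOUCH_BUTTONS = {
    "btn_more": Intent.LEARN_MORE,
    "btn_clarify": Intent.CLARIFY,
    "btn_change": Intent.CHANGE_TOPIC,
}


def intent_from_voice(utterance: str) -> Optional[Intent]:
    """Match a spoken utterance against known phrases (simple substring match)."""
    text = utterance.lower()
    for phrase, intent in VOICE_PHRASES.items():
        if phrase in text:
            return intent
    return None


def intent_from_touch(button_id: str) -> Optional[Intent]:
    """Map a tapped button to its intent."""
    return TOUCH_BUTTONS.get(button_id)


def apply_intent(session: Session, intent: Intent, new_topic: Optional[str] = None) -> str:
    """Update the session and return the mentor's spoken response."""
    if intent is Intent.LEARN_MORE:
        session.depth += 1
        return f"Going deeper on {session.topic} (level {session.depth})."
    if intent is Intent.CLARIFY:
        return f"Let me explain {session.topic} another way."
    # CHANGE_TOPIC: switch topics and start again from the top level.
    session.topic = new_topic or session.topic
    session.depth = 0
    return f"Switching to {session.topic}."


session = Session(topic="photography")
print(apply_intent(session, intent_from_voice("Can you tell me more?")))
print(apply_intent(session, intent_from_touch("btn_change"), new_topic="city history"))
```

Routing voice and touch through one shared set of intents keeps the mentor's behavior identical regardless of how the passenger chooses to interact.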

Reflect on what you learned during your car ride

03

The Mobile Mentor can summarize what you learned, while also pointing out key milestones you hit in your educational goals. This allows the passenger to reinforce their learning and find their time in the car meaningful.

RESEARCH

We conducted 4 different research activities to understand our domain and primary users

Synthesis

We ran affinity diagramming sessions in which we grouped similar ideas and concepts together and named an overall theme for each cluster. The goal of our synthesis was to identify insights across the primary and secondary research activities we had conducted.

Our synthesis led us to three main features of the current state of transit, and how they will evolve in the future to reflect the wants and needs of Gen Z.

01 Make learning personalized.

Through our conversations with Gen Z about learning, we found that although they associate the term with formal learning, non-formal and informal learning, such as TikToks and Reels, is more exciting to them.

Because these less formal learning methods allow for personalization and control, Gen Z actively seek out and engage in activities that excite them, and this does not feel like a burden. This need for control comes from a combination of formal learning avenues not satisfying their interests and the abundance of content available in this digital age.

Focusing on personalized learning content would enable Gen Z to engage in more fulfilling activities in transit. However, our research also showed that current resources make it difficult to do so.

02 Make learning supportive.

There are many other constraints when it comes to learning in cars. Productivity on short trips can be very different from productivity on long trips. In addition, the car is a very confined space. There is no desk in the car for your laptop or books, and you can’t really stretch your legs or move around when you want to change position while working.

Finally, people have limited cognitive capacity, and the existing car technology can make it harder for them to process more information or learn effectively. To be productive in the car, people have to plan ahead, prioritize their tasks, and choose the right tools and strategies to make the most of their time.

Gen Z currently doesn’t see the car as an ideal learning environment due to the constraints mentioned above, but that might change if we could address some of these concerns.

03 Make learning easy.

To facilitate learning during travel, it's crucial to maintain a low cognitive load. When we try to learn in difficult travel conditions, we risk cognitive overload and difficulty in focusing.

However, in 2030, partially autonomous vehicles will shoulder the burden of navigation and weather unpredictability. This will reduce the mental load of travel, allowing passengers to engage in other tasks like learning.

Advancements in AI and machine learning can curate personalized learning opportunities, making complex subjects digestible and tailored to the learner.

By integrating these tech advancements, we can create an environment where both travel and learning conditions are low-effort. This transformation can make travel time a productive learning experience for Gen Z.

DESIGN

Over 5 iterations, our prototypes grew increasingly immersive with each step

Iteration 01

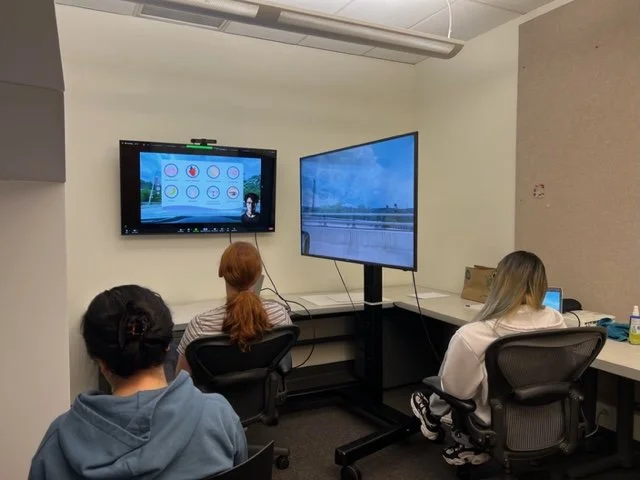

Based on the insights from our research, we built a cardboard prototype and asked participants what features they envisioned for their ideal Mobile Mentor. From these responses, we found that participants wanted a Mobile Mentor that engages multiple senses and provides experiences based on different mood states.

Iteration 02

We built a higher-quality foam core prototype and designed two “Wizard of Oz” experiences, one that involves learning in an upright environment and another in a more relaxed and prone environment. We found that participants liked the upright environment more and preferred the conversational element with visual aids, so we decided to focus on this experience further.

Iteration 03

From here, we refined our conversational scripts and designed visual aids in Figma. A teammate acted as the “wizard,” performing the conversational element of the Mobile Mentor behind the scenes. Honda generously provided a pre-existing foam bus model to simulate a more realistic environment. We found that passengers desired realistic human avatars to guide their learning journey.

Iteration 04

At this stage, we increased the visual fidelity of the Mobile Mentor. I led the design of the conversational avatar, using the latest AI tools such as Midjourney, ChatGPT, D-ID, and ElevenLabs to create a more realistic experience. I explored exciting new technology while building our prototype, including some tools released only a few months before our project.

Iteration 05

We took our prototype to another level and simulated the experience inside a moving car with our participants. We realized that the movement of the car created barriers to focusing on the interface and that passengers prefer more immersive experiences in the front and less intrusive experiences on the windows, which we will address in our next iteration.

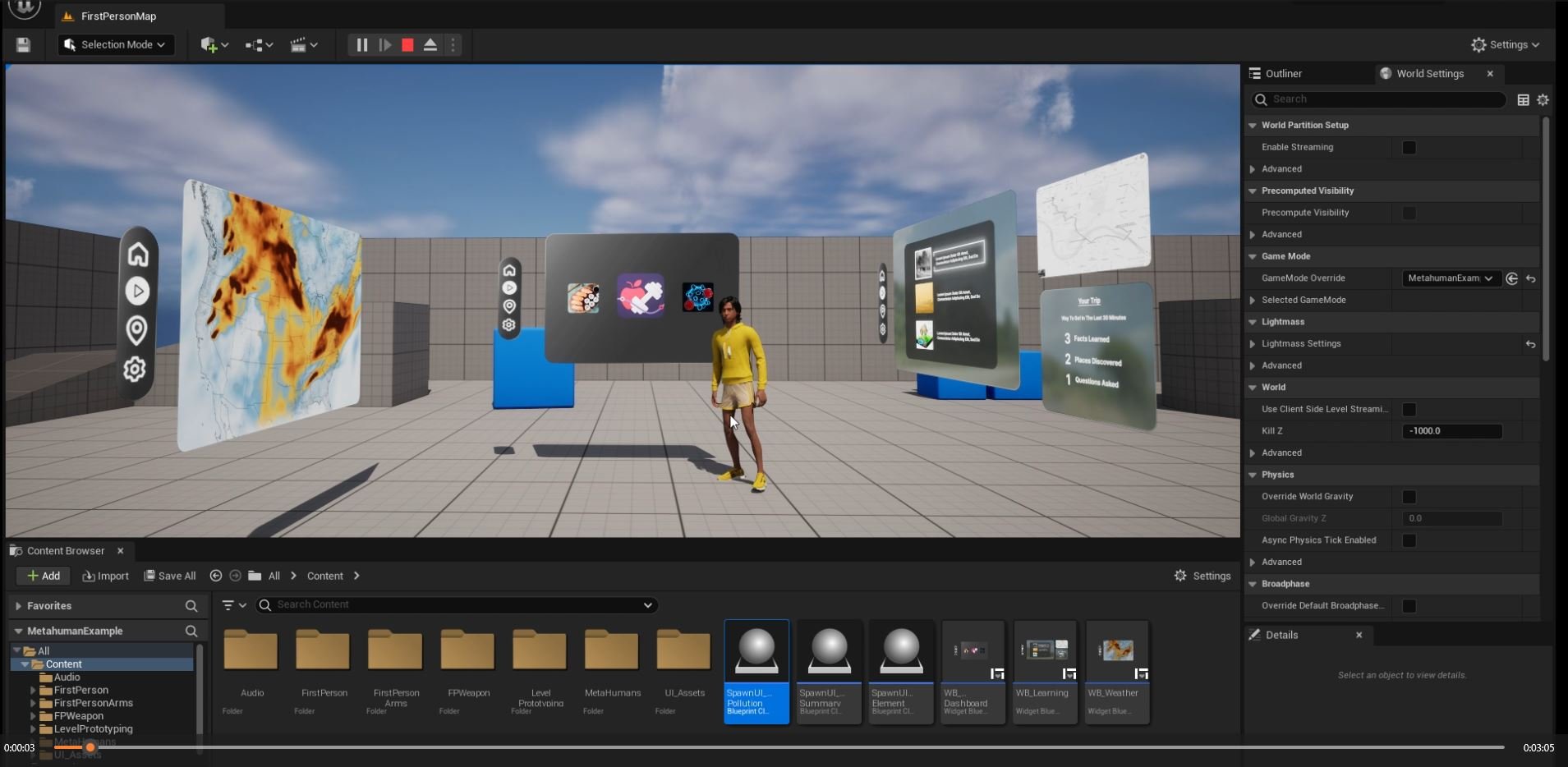

Adding the Screens

We brought our Figma screens into Unreal’s Content Browser. Using widgets and Blueprints in Unreal Engine, we were able to bring our screens into 3D space.

Visual Scripting

We used Blueprint nodes, which are pre-written blocks of C++ code, to create interactions on the buttons and screens.

Designing an Immersive Experience

For our final iteration, we used Unreal Engine to simulate an augmented reality experience. We used cutting-edge technology such as Unreal’s MetaHumans and an external tool from Inworld AI to simulate a more natural conversational experience.

Designing the Avatar

We used the MetaHuman plugin in Unreal to create a 3D character that is fully modeled, rigged, and ready to animate. We also used Inworld AI, an external tool that provides an API token allowing the avatar to speak in a ChatGPT-like conversation.

Added Elements

Since our experience primarily engages the senses of hearing and sight, we also added other immersive elements, such as ambient music, to the experience.